Docker tutorial for Data Science

What is Docker?

Docker is a tool for creating and deploying isolated environments for running applications with their dependencies.

- Installation guide

- Docker Container: A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A running container is a single, live instance of the application.

- Containers are isolated environments that run applications. They include everything needed to run the application, such as the code, runtime, libraries, and configuration files.

- Containers are lightweight because they share the host system’s operating system kernel, unlike virtual machines, each of which runs its own full OS.

A Docker container can be loosely seen as a virtual computer inside our computer. We can share it (more precisely, the image it was created from) with our friends or anyone else, and when they start this computer and run our code, they will get the same results as we did.

- Docker Image: A blueprint for creating containers. Images are immutable, so all containers created from the same image start out exactly alike.

- An image is a read-only template used to create containers. Images contain the application code, libraries, dependencies, and tools.

- Docker images are built from a Dockerfile, a script that contains a series of instructions on how to build the image.

- Dockerfile: A text file containing a list of commands to call when creating a Docker Image.

- How to create a Docker Image? Link
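To make the Dockerfile bullets above concrete, here is a minimal sketch that writes a Dockerfile for a small Python data science project. The base image python:3.11-slim, the requirements.txt file, the /workspace folder, and the helper names render_dockerfile and write_dockerfile are assumptions for illustration, not part of the original tutorial. Pinning the base image tag and the package versions in requirements.txt is what keeps rebuilds reproducible.

```python
from pathlib import Path

# Hypothetical minimal Dockerfile for a Python data science project.
# Assumptions: the project folder contains a requirements.txt with pinned
# versions and an entry-point script called script_name.py.
DOCKERFILE_TEMPLATE = """\
FROM {base_image}
WORKDIR /workspace
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "script_name.py"]
"""


def render_dockerfile(base_image: str = "python:3.11-slim") -> str:
    """Return the Dockerfile text for the given base image."""
    return DOCKERFILE_TEMPLATE.format(base_image=base_image)


def write_dockerfile(project_dir: str, base_image: str = "python:3.11-slim") -> Path:
    """Write a file named Dockerfile into project_dir and return its path."""
    path = Path(project_dir) / "Dockerfile"
    path.write_text(render_dockerfile(base_image))
    return path
```

From the project folder, docker build -t my_image . would then build an image from this file (my_image is a placeholder name).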

Why Docker?

- Reproducibility: Similar to a Java application, which runs the same way on any device capable of running a Java Virtual Machine, a Docker container behaves the same way on any system that can run Docker (for the same CPU architecture). The exact specification of an image is stored in its Dockerfile. By distributing this file among team members, an organization can ensure that images built from the same Dockerfile function identically, provided the Dockerfile pins the base image and package versions. In addition, having an environment that is constant and well documented makes it easier to keep track of your application and identify problems.

- Isolation: Dependencies or settings within a container will not affect any installations or configurations on your computer, or on any other containers that may be running. By using separate containers for each component of an application (for example a web server, front end, and database for hosting a web site), you can avoid conflicting dependencies. You can also have multiple projects on a single server without worrying about creating conflicts on your system.

- Security: With important caveats (containers share the host's kernel, so they are less strongly isolated than virtual machines), separating the different components of a large application into different containers can have security benefits: if one container is compromised, the others are not automatically compromised as well.

- Docker Hub: For common or simple use cases, such as a LAMP stack, the ability to save images and push them to Docker Hub means that there are already many well-maintained images available. Being able to quickly pull a premade image or build from an officially maintained Dockerfile can make this kind of setup process extremely fast and simple.

- Environment Management: Docker makes it easy to maintain different versions of, for example, a website served by nginx. You can have separate containers for testing, development, and production on the same server and easily deploy to each one.

- Continuous Integration: Docker works well as part of continuous integration pipelines with tools like Travis, Jenkins, and Wercker. Every time your source code is updated, these tools can save the new version as a Docker image, tag it with a version number and push to Docker Hub, then deploy it to production.

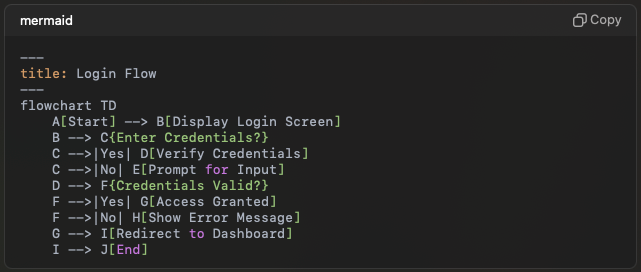

Creating a Docker container from an image ID

Get the image ID with the following command:

docker images

Select one image ID and run the following command to create a container from it:

docker run -itd -v "$(pwd)":/workspace/ -p xyzw:xyzw --name=any_name image_id

docker run is the command that takes a Docker image and creates a container from it. The -itd flags keep STDIN open (-i), allocate a pseudo-terminal (-t), and run the container in the background (-d), printing its ID.

- Mounting your working file system

-v maps your current working folder (where you execute the docker run command), given by $(pwd), to a /workspace folder inside the container's file system. Files changed in one place are visible in the other.

- Port forwarding

-p xyzw:xyzw publishes the container's port xyzw on port xyzw of the host (the format is host_port:container_port). In essence, after you run this on a computer, a service listening on port xyzw inside the container is reachable at http://{computer-ip}:xyzw. xyzw can be any port that is not already in use.
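The same docker run command can be assembled from Python, which makes each flag explicit. This is a sketch assuming the docker CLI is on your PATH; the helper names docker_run_command and create_container are hypothetical, and the mount target defaults to /workspace as in the command above.

```python
import os
import subprocess
from typing import List


def docker_run_command(image_id: str, name: str, port: int,
                       host_dir: str, container_dir: str = "/workspace") -> List[str]:
    """Build the `docker run -itd -v ... -p ... --name=...` command from the tutorial."""
    return [
        "docker", "run",
        "-itd",                               # keep STDIN open, allocate a TTY, detach
        "-v", f"{host_dir}:{container_dir}",  # bind-mount host_dir into the container
        "-p", f"{port}:{port}",               # host_port:container_port
        f"--name={name}",
        image_id,
    ]


def create_container(image_id: str, name: str, port: int) -> str:
    """Create the container with the current folder mounted; return its ID."""
    cmd = docker_run_command(image_id, name, port, host_dir=os.getcwd())
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout.strip()  # with -d, docker run prints the new container's ID
```

For example, create_container("bf756fb1ae65", "mlprojects", 1220) corresponds to the docker run line in the example that follows, except that it mounts the current folder instead of /home/.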

The command above prints the new container's ID. To open a shell inside the running container, run:

docker exec -it container_id bash

Example:

1. Switch to the root user:

(base) swatimeena@Swati’s-MacBook-Pro ~ % sudo su

2. List the Docker images:

sh-3.2# docker images
REPOSITORY    TAG      IMAGE ID       CREATED        SIZE
fedora        latest   a368cbcfa678   3 months ago   183MB
project_1     latest   cbb0d901ffb3   3 months ago   5.57GB
hello-world   latest   bf756fb1ae65   9 months ago   13.3kB

3. Select one image ID (here, bf756fb1ae65).

4. Create the container:

sh-3.2# docker run -itd -v /home/:/home/ -p 1220:1220 --name=mlprojects bf756fb1ae65

(Replace mlprojects with any custom name; instead of 1220 you can use any free port.)

5. Get the container ID:

sh-3.2# docker ps -a

or

sh-3.2# docker ps

CONTAINER ID   IMAGE          COMMAND    CREATED          STATUS                      PORTS   NAMES
09a50fd07b8b   bf756fb1ae65   "/hello"   12 seconds ago   Exited (0) 12 seconds ago           mlprojects

(Look for the name you chose or, if you did not use the --name argument, for the container created a few seconds ago, and copy its container ID. docker ps without -a lists only running containers. Note that hello-world only prints a message and exits, hence the Exited (0) status, and its image contains no bash, so docker exec will not work on this particular container; in practice, pick an image with a shell that keeps running, such as fedora.)

6. Open a shell in the container:

sh-3.2# docker exec -it 09a50fd07b8b bash

7. Install all dependencies inside the container and enjoy :)

root@d8a8d3523dc0:/#
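Instead of reading the ID off the table by eye, you can ask docker ps for only the ID and name columns through its --format option and look the name up. In this sketch, only the docker ps -a --format call is real Docker CLI; the helper names parse_id_name_pairs and find_container_id are hypothetical.

```python
import subprocess
from typing import Dict, Optional


def parse_id_name_pairs(output: str) -> Dict[str, str]:
    """Map container name -> container ID.

    Assumes each line has the form '<ID><TAB><NAME>', which is what
    docker ps prints with the --format template used below.
    """
    pairs = {}
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        container_id, name = line.split("\t", 1)
        pairs[name.strip()] = container_id.strip()
    return pairs


def find_container_id(name: str) -> Optional[str]:
    """Return the ID of the container called `name`, or None (requires Docker)."""
    result = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.ID}}\t{{.Names}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_id_name_pairs(result.stdout).get(name)
```

From a shell, docker ps -aq --filter name=mlprojects gives a similar result, although the name filter also matches containers whose names merely contain the given text.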

Running Jupyter inside the Docker container:

Open a shell in the container using

docker exec -it container_id bash

or, for a container that uses NVIDIA GPUs,

nvidia-docker exec -it container_id bash

Install Jupyter in the container:

pip install jupyter

Start the notebook server on the same port that the container exposes, i.e. xyzw:

jupyter notebook --ip=0.0.0.0 --port=xyzw --no-browser --allow-root &

--ip=0.0.0.0 makes the server listen on all network interfaces so that the published port can reach it, and --allow-root is needed because the docker exec shell usually runs as root.

Open the notebook in your local browser:

Open a browser, go to remote_ip:xyzw, and enter the token printed when the server starts.

To stop the notebook server running on port xyzw:

jupyter notebook stop xyzw
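The notebook command above can also be built and launched from Python inside the container. This is a sketch; jupyter_command and start_notebook are hypothetical helper names, and the port must match the container side of the -p host_port:container_port mapping.

```python
import subprocess
from typing import List


def jupyter_command(port: int) -> List[str]:
    """Build the `jupyter notebook` command used above for a given container port."""
    return [
        "jupyter", "notebook",
        "--ip=0.0.0.0",    # listen on all interfaces so the published port reaches it
        f"--port={port}",  # same port as the container side of `-p host:container`
        "--no-browser",    # there is no browser inside the container
        "--allow-root",    # the `docker exec ... bash` shell usually runs as root
    ]


def start_notebook(port: int) -> subprocess.Popen:
    """Start the notebook server in the background (run this inside the container)."""
    return subprocess.Popen(jupyter_command(port))
```

Keeping the flags in one function avoids the most common mistake, which is starting the server on a port other than the one published with -p.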

Running Python scripts inside the Docker container:

Open a shell in the container:

docker exec -it container_id bash

or

nvidia-docker exec -it container_id bash

Once you are inside the container, navigate to the folder containing your Python script with the cd command and run:

python script_name.py

(You first need to install all the necessary packages and dependencies inside the container, for example with pip.)
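You can also run a script without opening an interactive shell by passing the command straight to docker exec. A sketch, assuming the script lives in the mounted /workspace folder and python is installed in the container; exec_python_command and run_script are hypothetical helper names.

```python
import subprocess
from typing import List


def exec_python_command(container: str, script_path: str,
                        workdir: str = "/workspace") -> List[str]:
    """Build `docker exec -w <workdir> <container> python <script_path>`."""
    # No -it here: the script runs non-interactively and its output is captured.
    return ["docker", "exec", "-w", workdir, container, "python", script_path]


def run_script(container: str, script_path: str) -> str:
    """Run the script inside a running container and return its stdout."""
    result = subprocess.run(exec_python_command(container, script_path),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

This is convenient for batch jobs, since the host can collect the script's output without anyone attaching to the container.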

Other Docker commands

To see all running Docker containers:

docker ps

To see all Docker containers, including stopped ones:

docker ps -a

If the following command fails because the container is not running:

docker exec -it container_id bash

start the container first:

docker start container_id

There is no need to create a new container and reinstall all the dependencies; restarting the existing container with the command above is enough.

A killed or stopped container still exists and can be restarted the same way; you only need to create a new container if the old one was removed (for example with docker rm).
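The check-then-start logic above can be sketched as follows, assuming the docker CLI is available. docker inspect -f '{{.State.Running}}' prints true or false for a container; the helpers is_running and ensure_running are hypothetical names.

```python
import subprocess


def is_running(inspect_output: str) -> bool:
    """Interpret the output of `docker inspect -f '{{.State.Running}}' <container>`."""
    return inspect_output.strip() == "true"


def ensure_running(container: str) -> None:
    """Start a stopped container so that `docker exec` can attach to it."""
    result = subprocess.run(
        ["docker", "inspect", "-f", "{{.State.Running}}", container],
        capture_output=True, text=True, check=True,
    )
    if not is_running(result.stdout):
        # `docker start` reuses the existing container, keeping installed packages.
        subprocess.run(["docker", "start", container], check=True)
```

Calling ensure_running("mlprojects") before docker exec avoids the error you get when exec targets a stopped container.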

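Kill a Docker Container

docker kill container_name

Stop a Docker Container

docker container stop container_id

or

docker container stop container_name

docker kill stops the container immediately (with SIGKILL by default), while docker container stop first sends SIGTERM and only kills the container if it has not exited after a grace period (10 seconds by default).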